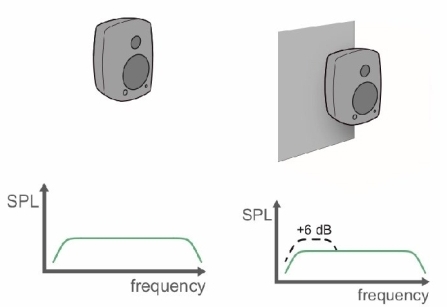

Baffle Step as a Pressure Director, Not Booster:

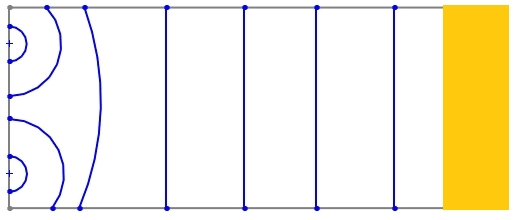

While baffle step doesn’t create an overall gain in sound pressure, it acts like a pressure director, concentrating sound waves in the forward direction (towards the listener) for frequencies above the baffle step frequency. This can be visualized as:

At low frequencies the sound wavelength is large compared to the baffle size:

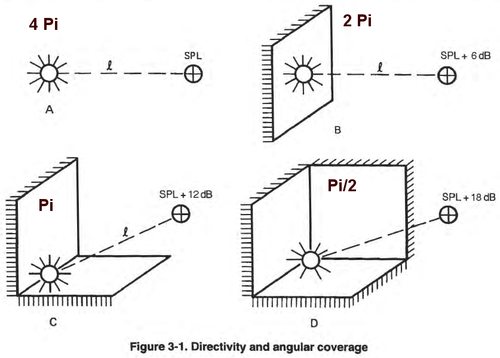

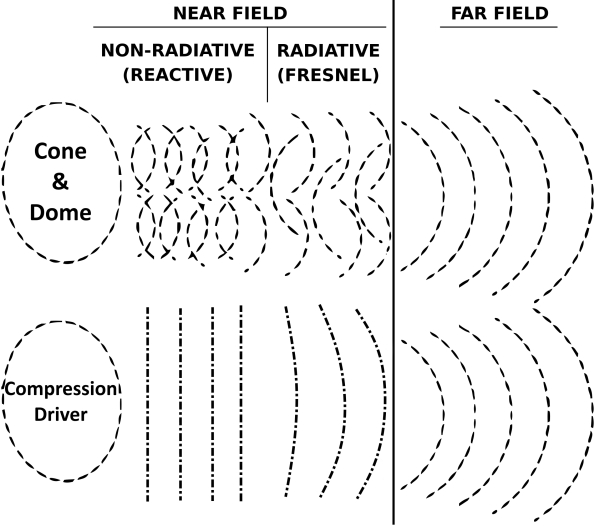

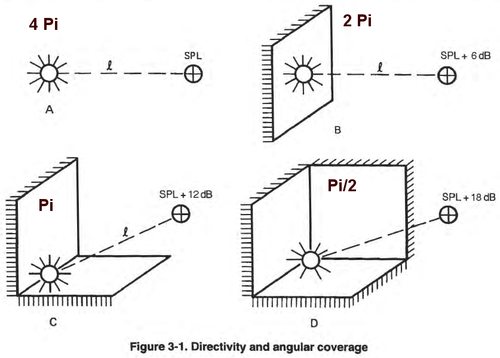

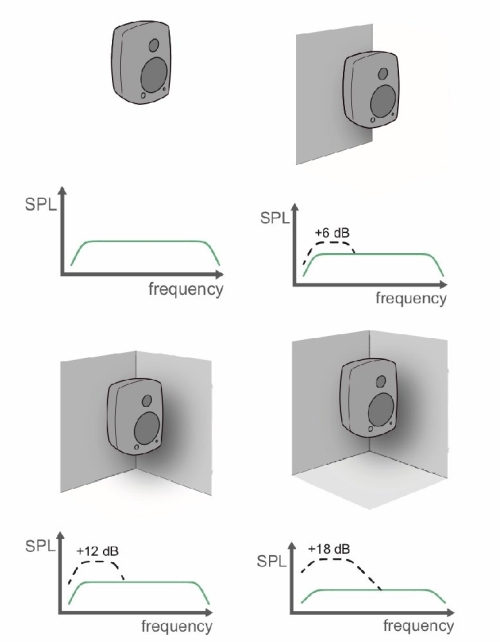

Sound from the driver radiates freely in all directions (think of a sphere),resulting in omnidirectional radiation (4π steradians).

When frequency increases and the wavelength becomes comparable to the baffle dimensions:

-

The cabinet dimensions start to influence the sound radiation pattern and pass the radiation to 4π to 2π leading to a pressure increase in the forward direction due to a more focused radiation pattern (generaly +6dB SPL).

-

Diffraction around the edges creates additional wavefronts at transition from 4π to 2π that interfere with the original waves from the driver. These interferences will causes cancellations or sommations at transition. Diffraction and Standing Waves article goes deep in these two concepts.

This combined effect leads to a global pressure increase in the forward axis from a frequency related to baffle dimensions and a beam of sound at transition.

The Energy point of view

While the baffle analogy is helpful, it’s important to remember that baffle step doesn’t create a uniform gain of +6dB SPL.

We take here as landmark the dB SPL (so in front of the speaker) in 4π, some parameters as woofer sensitivity are given in 2π on a big ISO baffle, so from this point of view, if we take SPL in 2π as landmark it’s no more +6dB SPL but -6dB SPL.

This difference of front pressure is in fact more a 6dB delta due to a difference in energy distribution than a plus or minus, to the energy point of view there is no loss or gain, the energy remain the same, only the his direction is changing, creating a energy focusing effect.

How to simulate it

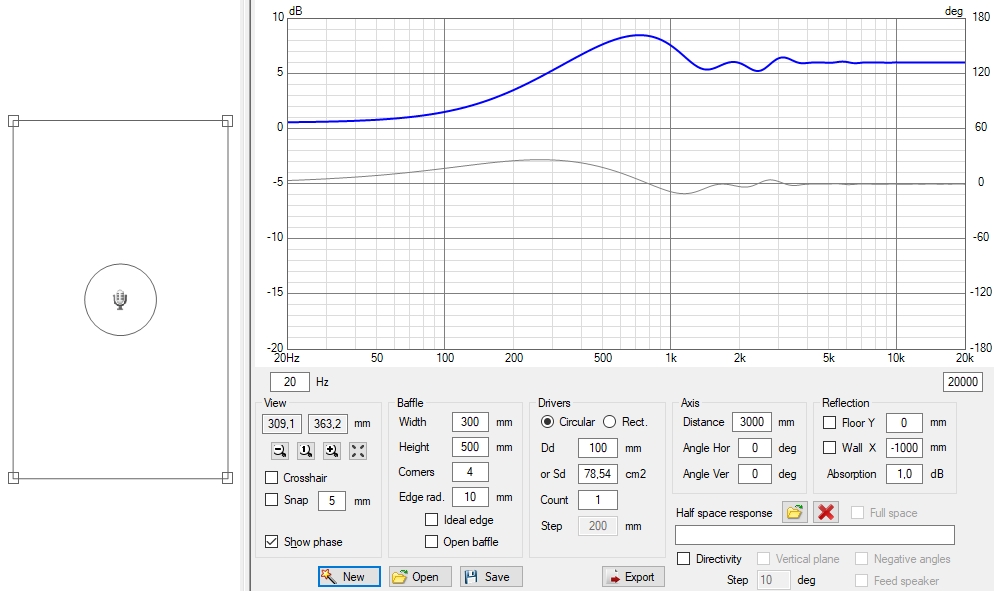

The pressure increase in the forward direction is gradual and depends on the specific baffle size and frequency, as we can simulate it in VituixCAD with the Diffraction tool:

Analogy, Baffle Step as a 180-degree Horn:

The analogy of a 180-degree horn is apt. Similar to a horn, the baffle acts to channel and focus sound waves in a specific direction. However, unlike a true horn that uses its shape to progressively increase pressure by loading and acoustic loading, the baffle doesn’t create new energy. It simply redirects existing energy.

Addressing the Decrease in Other Directions:

The pressure increase in the forward direction comes at the expense of sound pressure radiating to the sides and back. This decrease happens because:

-

The diffracted waves also travel sideways and backwards, but they might not be perfectly in phase with the original waves, leading to destructive interference and a decrease in pressure in those directions.

-

The reduction in radiation area from 4π steradians (omnidirectional) to 2π steradians (forward direction) inherently represents a increased sound pressure in a spedific direction, to the listener, so it will be attached to a decrease sound pressure off axis.

Analogy, The Water Fountain:

Imagine your speaker as a water fountain:

-

At low frequencies: The water sprays out in all directions, like a big sphere (think of a beach ball filled with water spraying everywhere). This is similar to how sound behaves at low frequencies from a speaker without a baffle step effect.

-

As the water pressure increases (higher frequencies): The water starts to travel further, but the edges of the fountain (like the edges of the speaker cabinet) restrict the spray. This makes the water shoot out more in the forward direction (towards you) and less to the sides and back.

This is similar to what happens with baffle step:

-

Higher frequencies are “focused” more towards the listener. This can sound a little louder in the front compared to the sides, especially for sounds just below the baffle step frequency.

-

However, the total amount of sound energy doesn’t necessarily change. It’s just distributed differently, with more emphasis on the front.

Baffle mistakes and solutions

For tweeter without waveguide, put it in an asymetrical position in the baffle is a good solution for dispatch the baffle diffraction (beaming), but by keeping the medium (if there is one) perfectly vertical to the tweeter.

The worst baffle step is given by “vintage” way to do, provoking a lot of diffraction impacting both on and off-axis:

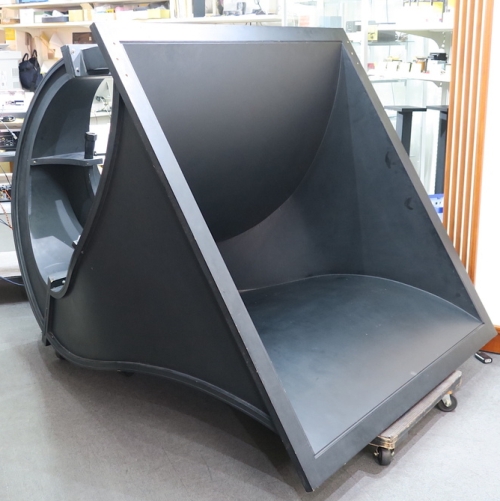

As seen in the midrange narrowing article, a big baffle will not necessarily solve the problem with a horn, as we have to pass from the profile acceleration of the horn to a 180° flat no acceleration surface, we have to accelerate then decelerate slowly for reach the big flat surface (it can be a wall in in-wall). The size of the panel, if he is not infinite (wall), will just put the problem further to the point of view of edge diffraction.

For waveguide or horns it’s always better to have an continuous profile to never create a flat area between the horn and the edges, as seen here with a X-shape X25:

Additional Considerations:

-

The specific frequency response of the baffle step depends on the size and shape of the cabinet. A wider baffle will have a lower baffle step frequency compared to a narrower one.

-

The proximity of the speaker to walls and other boundaries can further complicate the pressure distribution due to additional reflections.

By understanding the interplay between diffraction, constructive interference, and radiation patterns, we gain a deeper appreciation for the complexities of baffle step and its impact on speaker performance.

This text delves into how ITDG (Initial Time Delay Gap), EDT (Early Decay), critical distance, radiation coverage, and speaker directivity all influence the perception of space and sound clarity within a real room, focusing on aspects relevant to living rooms and home theaters.

Understanding ITDG

ITDG refers to the time difference between the arrival of the direct sound from a source (speaker, instrument, voice, etc.) and the arrival of the first significant reflections of that sound bouncing off the walls, ceiling, and floor.

ITDG and the Perception of Clarity

A room’s ITDG plays a crucial role in how clear the sound feels. Here’s the impact:

-

Short ITDG (less than 20ms): While a very short ITDG can enhance clarity to some extent, it can also create an unnaturally dry and sterile sound, lacking the richness of natural reverberation.

-

Optimal ITDG Range (20ms - 50ms): This range is generally considered ideal for good clarity in rooms like living rooms and home theaters. Early reflections arrive soon enough to reinforce the direct sound and improve intelligibility, but not so soon that they create an overly dry or “in-your-face” listening experience.

-

Long ITDG (over 50ms): As you pointed out, longer ITDGs can lead to a decrease in clarity by creating noticeable echoes and muddying the sound. This can be particularly detrimental for speech intelligibility, especially in dialogue-heavy content.

Note: range and value can vary from one author or research to another and about preferences.

Critical Distance and Radiation Coverage

-

Critical Distance: This is the distance from a sound source where the direct sound and the first reflections arrive at the listener’s ears with roughly equal intensity. Within this distance, the ITDG has a significant impact on perceived clarity. Beyond the critical distance, the influence of the room’s reflections becomes less prominent.

-

Radiation Coverage: This refers to the area effectively covered by a speaker’s sound dispersion pattern. It’s important to consider the radiation coverage of your speakers in relation to the listening distance and room shape.

Relationship to ITDG and Directivity

-

Speaker Directivity: Speakers with a wider directivity pattern (less focused sound dispersion) tend to create a larger critical distance. This means that ITDG plays a more significant role in shaping the perceived clarity at greater listening distances.

-

Adapting Radiation Coverage: By strategically placing speakers with appropriate constant directivity speakers adapted to listening distance, you can influence the ITDG experienced within the room..

Early Decay Time (EDT) and its Impact

EDT is the time it takes for the sound level in a room to decrease by 60 dB after the source has stopped emitting sound. This decay is primarily caused by the absorption of sound by the room’s surfaces (walls, ceiling, floor, furniture, acoustics, etc.).

The Relationship Between EDT and ITDG

While ITDG is influenced by the room’s shape, size, and the placement of sound sources and listeners, the absorption characteristics of the room (reflected in the EDT) also play a significant role:

-

High Absorption, Short EDT: If a room has a high absorption coefficient (meaning it absorbs sound waves efficiently), the first reflections will arrive weaker and decay more rapidly. This can lead to a shorter ITDG because the gap between the direct sound and the remaining reflections becomes smaller.

-

Low Absorption, Long EDT: Conversely, in a room with low absorption (meaning sound waves reflect more readily), the first reflections will be stronger and take longer to decay. This can contribute to a longer ITDG as the first significant reflection arrives later, increasing the time difference between it and the direct sound.

Optimizing for Clarity: Balancing ITDG and EDT

When aiming for good acoustics in a living room or home theater, it’s important to consider both ITDG and EDT:

-

Balancing ITDG: As discussed previously, an optimal ITDG range (around 20ms-50ms) is desirable for clear sound.

-

Managing EDT: A moderate EDT (around 0.5s-1.0s for living rooms and home theaters) helps control unwanted reverberation without creating an overly dry and sterile sound.

Optimizing Your Room’s Acoustics

When optimizing ITDG and sound clarity in your living room or home theater, consider these points:

When aiming for optimal Initial Time Delay Gap (ITDG) and sound clarity in your living room or home theater, consider these key factors:

-

Speaker Selection: Choose speakers with directivity patterns that suit your listening distance and room acoustics. Wider directivity create a larger critical distance (where direct sound and early reflections have similar intensity), influencing how ITDG affects perceived clarity, especially at greater distances.

-

Strategic Speaker Placement: Strategically position speakers based on their directivity and desired ITDG. Avoid placing them too close to side walls, as this can negatively impact sound quality.

While a slight “toe-in” angle can sometimes improve focus at larger listening distances, use it cautiously. Excessive toe-in narrows the “sweet spot” and can reduce overall clarity. This is because it increases directivity too much, leading to tonal imbalance. Additionally, over-toe-in cannot compensate for a large variation in Direct-to-Reverberant Energy Ratio (DI, for Directivity Index).

-

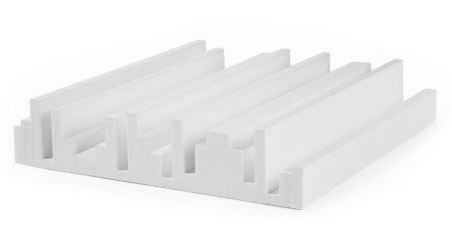

Absorption Acoustic Treatment: Utilize sound-absorbing materials like acoustic panels, thick curtains, or carpets on walls and ceilings. This helps soak up unwanted reflections, shorten ITDG, and improve overall clarity.

-

Diffusion Acoustic Treatment: Consider incorporating diffusers to scatter sound more evenly throughout the room. This can reduce the impact of strong direct reflections and further enhance overall clarity.

In most cases, proper acoustic treatment combined with speakers that offer constant directivity with a coverage adapted to your listening distance, oriented to the central listening position, will provide the more effective solution.

Constant Directivity

While constant directivity speakers (those that radiate sound uniformly across frequencies) can offer some advantages:

Consistent ITDG: Constant directivity speakers can help maintain a more consistent ITDG across the listening area, regardless of the frequency content of the sound. This can be beneficial for achieving a more uniform and predictable sound experience.

C20 and C50 Integration Times Explained:

The Time Period of Integration (TPI) represents the time window during which our auditory system fuses sound reflections with the direct sound, perceiving them as a single event rather than distinct echoes. This “fusion effect” is crucial for spatial perception and clarity. The TPI varies with frequency, distance, and other factors such as sound complexity, listening environment, and listener age. To understand how TPI influences our perception and room acoustics, see the detailed explanation at Critical Distance — Time Period of Integration (TPI).

In the context of EDT measurement using the exponential sine sweep method, C20 and C50 represent different integration times used when calculating the decay curve. These integration times essentially filter the measured data to focus on specific frequency ranges:

-

C20: This integration time emphasizes the decay of mid-range frequencies (around 200 Hz to 4 kHz) which are particularly important for speech intelligibility.

-

C50: This integration time considers a broader frequency range (around 50 Hz to 10 kHz) and provides a more holistic picture of the room’s overall reverberation characteristics.

By analyzing the decay curve using both C20 and C50 integration times, acoustic professionals can gain a more comprehensive understanding of the room’s absorption properties at different frequencies, which ultimately influences both ITDG and overall sound clarity.

Conclusion

By understanding ITDG, critical distance, radiation coverage, and speaker directivity, you can achieve a more comprehensive approach to optimizing the acoustics in your living room or home theater. By considering these factors alongside the previously mentioned techniques, you can create a space with clear, well-balanced sound that enhances your listening experience.

What is Critical Distance ?

In sound reproduction, achieving a balanced listening experience depends on the interaction between the direct sound from the speakers and the reflected sound bouncing around the room (often referred to as the diffuse field).

This ideal balance is often described as a 50/50 ratio of direct to reverberated sound. However, this is a guideline, and preferences can vary depending on the music and your personal taste, with some favoring a 60/40 or 40/60 mix.

The critical distance is the distance from the speakers where the direct and reflected sound levels become roughly equal. It’s influenced by some key factors:

-

Room Acoustics (Sabine Absorption): Sabine absorption refers to the total amount of sound energy absorbed in a room, calculated using the Sabine equation, which relates reverberation time to the room’s volume and absorption properties. Higher absorption reduces reverberation, while lower absorption increases it.

Mathematically, rooms with lower absorption (more reflective surfaces, low alpha sabine) should have a longer critical distance, as the direct sound needs to travel farther before reaching a 50/50 balance with the reverberant field.

However, in practice, the opposite effect is often observed because the reverberant field is primarily sustained by sidewall reflections, while absorption is typically concentrated on the front and/or rear walls and not on side wall to preserve the Listener envelopment, altering the distribution of reflections and increasing the effective critical distance.

-

Speaker Directivity Factor: Speakers with a wider dispersion pattern (spreading sound over a larger area) generally have a shorter critical distance when a narrower ones has a longer one. This is because the off-axis sound increasing sound energy by bouncing off walls and other surfaces.

-

Wider Dispersion: Speakers with a wider dispersion pattern (spreading sound over a larger area) generally have a shorter critical distance. This is because they excite a larger portion of the room with off-axis direct sound, leading to a higher overall level of sound energy reaching the listener even at closer distances. However, these speakers also tend to contribute more to the reverberated field due to the increased sound energy bouncing off walls and other surfaces.

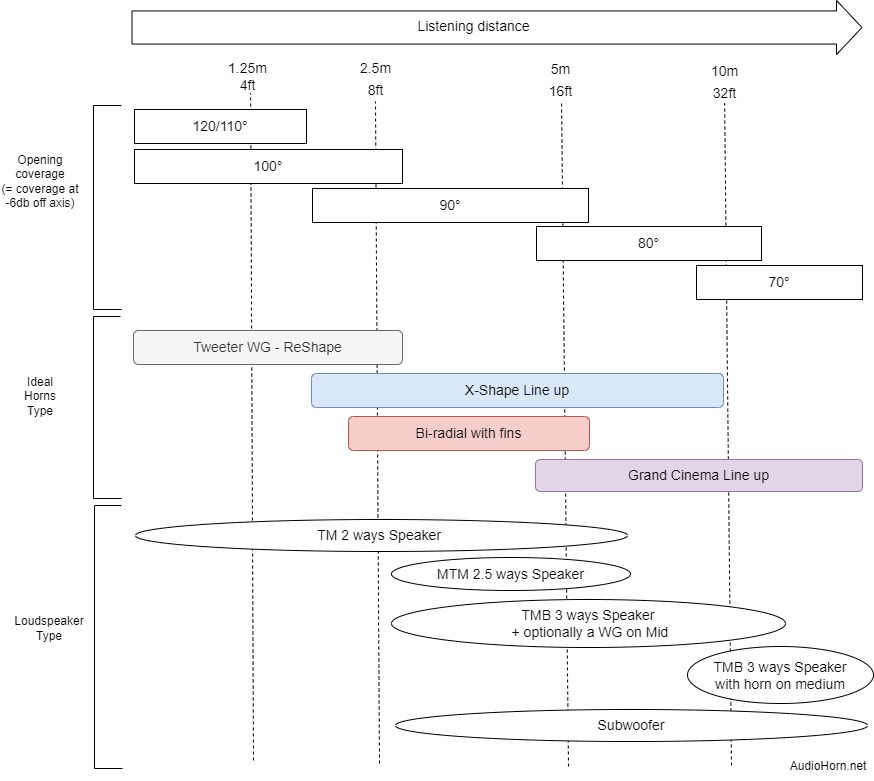

Generally 90° constant directivity horn are adapted from 2/2m50 to 5/6m. -

Narrower Dispersion: In contrast, speakers with a narrower dispersion pattern focus sound energy within a more concentrated cone. This means that a smaller portion of the room is directly excited, requiring a longer listening distance to achieve a similar level of sound energy compared to wider dispersion speakers. While they contribute less to the overall reverberated sound due to the focused sound direction, achieving a balanced sound experience with these speakers might necessitate sitting further away.

Generally 80/70° constant directivity horn are used after 5/6m.

-

Additional Considerations:

-

Frequency and Directivity: The balance between direct and reverberated sound can also vary with frequency due to speaker directivity. Speakers with constant directivity help to maintain a more consistent balance across the listening area.

-

Speaker Placement: While not directly related to critical distance, proper speaker placement can enhance the sound experience. Avoid placing the listening position (sofa) directly against the wall. This ensures the listener isn’t too close to the wall, affecting the ideal balance of direct and reflected sound. Additionally, maintaining some space between the seating area and the walls promotes better sound dispersion throughout the room. Angling the speakers slightly towards the listening area can create a more focused and engaging soundstage.

The formula can be found in Appendix A.

Why Respect It?

Respecting critical distance, along with using constant directivity devices and proper acoustic treatment when possible, allows us to achieve an optimal sound balance in the listening room. This translates to several benefits:

More Neutral and Faithful Sound: By achieving a balanced mix of direct and reflected sound, we hear the audio closer to how it was intended to be heard by the artist or sound engineer. The sound becomes more natural and accurate, avoiding unwanted coloration.

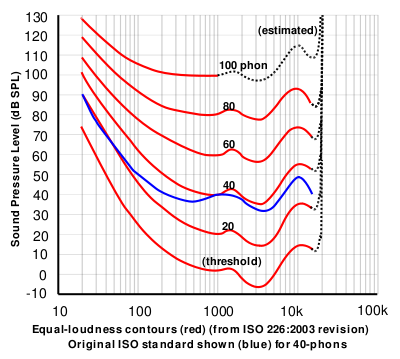

Increased Listening Volume Without Fatigue: Our hearing is generally more sensitive and find their limit at the most sensitive part of our ears. When the critical distance is not respected, especially in rooms with low absorption, reflections can cause an excess of high-frequency energy. This creates a fatiguing and harsh sound, forcing us to listen at lower volumes.

By adapting directivity to our listening distance, we can achieve two key benefits:

-

Controlled High Frequencies: The speaker’s directivity helps focus high-frequency sound towards the listener, reducing uncontrolled reflections and preventing high frequencies from becoming harsh or fatiguing. This targeted approach allows for a more balanced energy spectrum across all frequencies. The highs won’t overpower the lows, resulting in a more natural,faithful and detailed listening experience.

-

Improved Lower Frequency Presence and higher volume listening: With a balanced energy spectrum, we can listen at higher volumes without experiencing ear fatigue caused by excessive high frequencies, critical distance plays a role in how we perceive the low frequencies in comparaison to the high ones when they are controlled according to listening distance and with constant directivity behavior.

When the balance is right, the low frequencies will have a stronger presence without overpowering the rest of the sound.

In simpler terms, respecting critical distance helps us achieve a more natural, balanced, and enjoyable listening experience at higher volumes without listener fatigue.

Modern Speaker Design: Beyond the 120-Degree Coverage Myth

Nowadays, many loudspeakers prioritize achieving a strict 120-degree sound dispersion (directivity) across all frequencies.

However, this “one-120°-coverage-fits-all” approach ignores psychoacoustics (how we hear) and the great impact of listening distance.

In most rooms, a 90-degree horizontal constant directivity provides a more even distribution of sound energy, creating a well-balanced listening experience.

This approach recognizes the concept of critical distance. Conversely, 120 degree dispersion in a typical listening environment will excite the sidewalls too much (creating too much reflected sound) and will make the sound less and less balanced and more and more aggressive the further we go away to the speakers.

Here is a guide for listening distance and type of horn with coverage (most of our horns are availables in several coverage) we can use:

Direct, Reverberated Field and Modal regime

Understanding the behavior of sound waves in a room is crucial for achieving a good listening experience. Here, we’ll differentiate between two key concepts and explore some additional factors influencing low-frequency response:

Direct and Reverberated Field

-

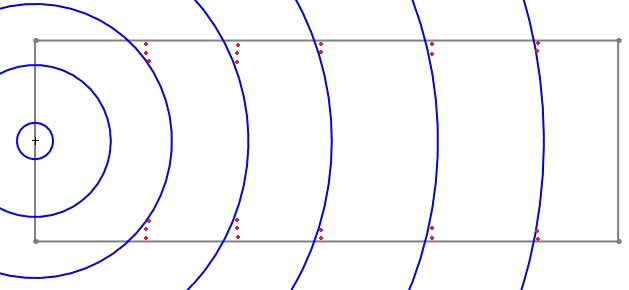

Direct Field: This refers to the sound that travels directly from the source (speakers) to the listener without any reflections from walls or other surfaces. The intensity of the direct field decreases with distance following the inverse square law, meaning it weakens by 6 dB for each doubling of distance. This is why sound gets progressively quieter as you move further away from the speakers.

-

Diffuse or Reverberated Field: A diffuse sound field is one that is homogeneous and isotropic (exhibits the same properties in all directions) throughout the volume of a room at any given time. It is made up of an infinite number of plane waves propagating in all directions. There are no major room-related anomalies to be observed in the measurements. Unlike the direct field, the diffuse field level is considered relatively constant within the listening area. This is because the sound has reflected numerous times and reached the listener from various directions, averaging out any distance-related variations.

It’s important to note: While the diffuse field is considered relatively constant within the listening zone, the overall sound pressure level (SPL) in a room, including the contribution from the diffuse field, will still decrease with distance from the speakers following the inverse square law. This decrease is primarily caused by the direct sound attenuating with distance, while the diffuse field level remains more stable within the listening area.

Modal Regime and Modes:

In addition to the concepts above, two other factors significantly influence how sound behaves in a room, particularly at lower frequencies:

-

Modal Regime: This refers to a situation where reflections are very important but localized and resonate at specific frequencies. These localized reflections coexist with absorptions at nearby frequencies, leading to a chaotic response, especially noticeable in the low frequencies (30-80Hz) of typical living rooms.

-

Modes: These chaotic responses manifest as large peaks and dips (typically +/- 15dB) in the sound pressure level at specific frequencies. They arise because the room dimensions create resonances where sound waves are reflected back and forth, reinforcing certain frequencies and cancelling others.

The consequence of these modes is the creation of resonance frequencies and a type of reverberation that’s specific to the room itself. Even small changes in speaker position can significantly alter this response.

Schroeder Frequency:

The Schroeder frequency is a critical transition zone, often with a wide range, that separates the modal regime from the diffuse field. Below the Schroeder frequency, the modal regime dominates, leading to the chaotic response described earlier. Above the Schroeder frequency, the diffuse field becomes more prominent, with sound reflecting more randomly throughout the room and creating a smoother response.

Room Size and Schroeder Frequency: The size of the room significantly impacts the Schroeder frequency. Larger rooms have a lower Schroeder frequency. In very large rooms, the Schroeder frequency can even fall outside the audible range of human hearing. This means that even low frequencies will exhibit a more diffuse character, which is often considered ideal for listening experiences. In these large rooms, the density of modes in the low frequencies becomes high enough to create a diffuse response.

The formula can be found in Appendix B.

Time Period of Integration (TPI), the brain interpretation:

The time period of integration (TPI) is the time window within which our auditory system perceives sound reflections as part of the direct sound rather than distinct echoes. This phenomenon is known as the “fusion effect.”

- If a reflection (Diffuse Field) arrives within a certain time delay (TPI), it merges with the direct sound, creating a single auditory event.

- If the delay exceeds the TPI, the reflection is perceived as a separate echo.

The TPI depends on several factors:

- Frequency: TPI is generally longer at lower frequencies and shorter at higher frequencies.

- Distance: The TPI increases with the distance between the sound source and the listener.

- Head size: The TPI is slightly affected by the size of the listener’s head.

Additional Factors:

The TPI is influenced by various factors beyond the distance and frequency:

- Sound type: Complex sounds like speech have longer TPIs than pure tones.

- Listening environment: TPI can be longer in noisy environments.

- Listener’s age: TPI tends to increase with age.

- Level difference between direct sound and reflections: Higher level differences lead to shorter TPIs.

Thévenot’s Fusion Curve:

This curve illustrates the relationship between the TPI and the level difference between the direct sound and its reflection.

A higher level difference allows for a longer TPI, meaning that reflections can arrive later without being perceived as echoes.

The formula can be found in Appendix C.

Haas Effect

The Haas effect is a psychoacoustic phenomenon related to the TPI.

If two identical sounds arrive very close together (under 35 milliseconds), our brains perceive them as a single sound coming from the direction of the first sound to arrive.

This allows sound engineers to increase sound level in the back of a room without affecting perceived sound direction by using delaying signal sent to a relay speaker.

In room accoustics in our reverberated field the early reflection on the near wall can create another “virtual” source and enter in Haas effect, it can be negative if the amplitude of this virtual source is too high.

Conclusion and additional considerations:

The critical distance formula is most accurate in ideal conditions with a well-established diffuse sound field (even distribution of sound throughout the room). In real rooms, this might not always be the case.

The formula is also only strictly valid if the listening position is directly on the speaker axis.

There’s no one-size-fits-all solution. Experiment, consider the factors mentioned, and personalize your listening experience, there is no golden rule because every room acoustics is different, but we often see:

- 90° constant directivity horn: 2/2m50 to 5/6m

- 80/70° constant directivity horn: 5/6m and more

- Line array: very long distance outdoors (large festivals, no obstavle), the distance between the center of the first speaker and the center of the last speaker must be equal to or greater than the wavelength of the lowest frequency reproduced, knowing that 80hz is a wavelength of 4m25.

The main goal of line array is in fact to maintain SPL for everybody at distance, nor really the directivity.

Appendix A: Calculating Your Ideal Listening Distance

Experiment with different listening distances to find the sweet spot that sounds best to you.

Consider the size of your room and the type of speakers you have.

Take into account your personal preferences for a more direct or spacious sound.

Critical Distance Formula:

The critical distance, which is the theoretical distance for achieving a 50/50 balance, can be calculated using the following formula:

dc = √(αQ / 50)

Where:

dc is the critical distance in meters

α is the Sabine absorption equivalent in m²/s (explained below)

Q is the directivity factor of the speaker (a value representing how the speaker spreads sound)

Directivity factor represents the speaker’s tendency to focus sound in specific directions compared to an ideal omnidirectional source radiating sound equally in all directions.

High Q: Indicates a more directional speaker, concentrating sound energy in a narrower beam.

Low Q: Indicates a more omnidirectional speaker, radiating sound more evenly.

Understanding Q is crucial in the formula as it influences the critical distance (dc):

Higher Q (more directional): Leads to a greater critical distance. This means you can sit further away from the speaker and still maintain a good balance between direct and reflected sound (50/50 ratio).

Lower Q (less directional): Results in a shorter critical distance. You need to sit closer to the speaker to achieve the desired balance.

Remember:

-

Q is usually measured in decibels (dB).

-

It often varies with frequency.

-

Speaker design significantly impacts directivity.

Sabine Absorption Equivalent (α):

The Sabine absorption equivalent (α) represents the total sound-absorbing capacity of a room. It essentially measures how effectively a room absorbs sound energy and prevents reflections. A higher α value indicates greater sound absorption, leading to a shorter reverberation time (less echo) and a potentially quieter environment. Conversely, a lower α value means more reflections and a longer reverberation time, resulting in a noisier and potentially less comfortable space.

Calculating Sabine Absorption:

The total Sabine absorption of a room can be calculated by summing the product of the surface area of each material and its corresponding sound absorption coefficient (SAC).

Total Absorption (α) = Σ (Surface Area x Sound Absorption Coefficient)

Σ (sigma) represents the summation over all materials in the room.

Sound Absorption Coefficient (SAC):

The sound absorption coefficient (SAC) is a value between 0 (perfectly reflective) and 1 (perfectly absorbent) that specifies how well a particular material absorbs sound at different frequencies. Different materials have different SAC values, and understanding these values is crucial for calculating the total Sabine absorption of a room.

Appendix B: Schroeder frequency formula

Fs = 2000 * √(T/V)

Where:

Fs is the Schroeder frequency in Hertz (Hz)

T is the reverberation time of the room in seconds (s)

V is the volume of the room in cubic meters (m³)

Appendix C: TPI formula

The TPI can be estimated using various formulas, including:

Schroeder’s Formula: TPI = 0.6 * (c / f) * (1 + (d / r))

Blauert’s Formula: TPI = 1.5 * (c / f) * (1 + (d / r))^0.5

Houtgast’s Formula: TPI = 4.2 * (c / f) * (1 + (d / r))^0.4

Where:

c is the speed of sound (approximately 343 m/s)

f is the sound frequency in Hertz (Hz)

d is the distance between the sound source and the listener (in meters)

r is the radius of the listener’s head (approximately 0.0875 m)

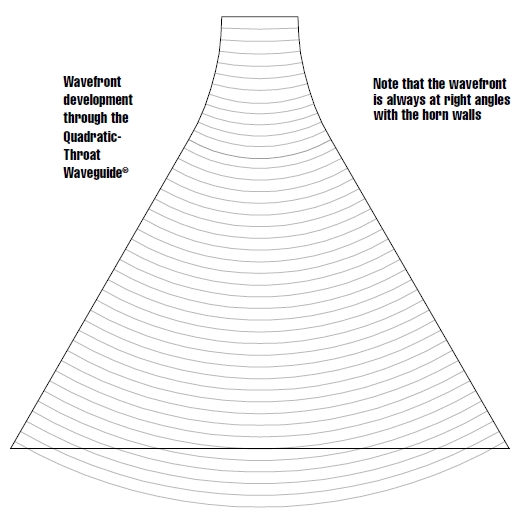

Wavefront Basics and Challenges:

Sound travels in waves, and the shape of the wavefront determines how the sound propagates in horns. Ideally, the wavefront should smoothly follow the horn’s curvature for optimal constant directivity (controlled sound dispersion).

However, horns with diagonal sections pose a challenge. A wavefront, while traveling at the speed of sound, inherently wants to maintain a 90-degree angle to its edges.

This tendency is crucial when the wavefront encounters sudden geometry changes, like those found in diagonal horn sections.

These rapid transitions can “pull” and deform the wavefront in some points, causing a phenomenon called diffraction (bending and spread out).

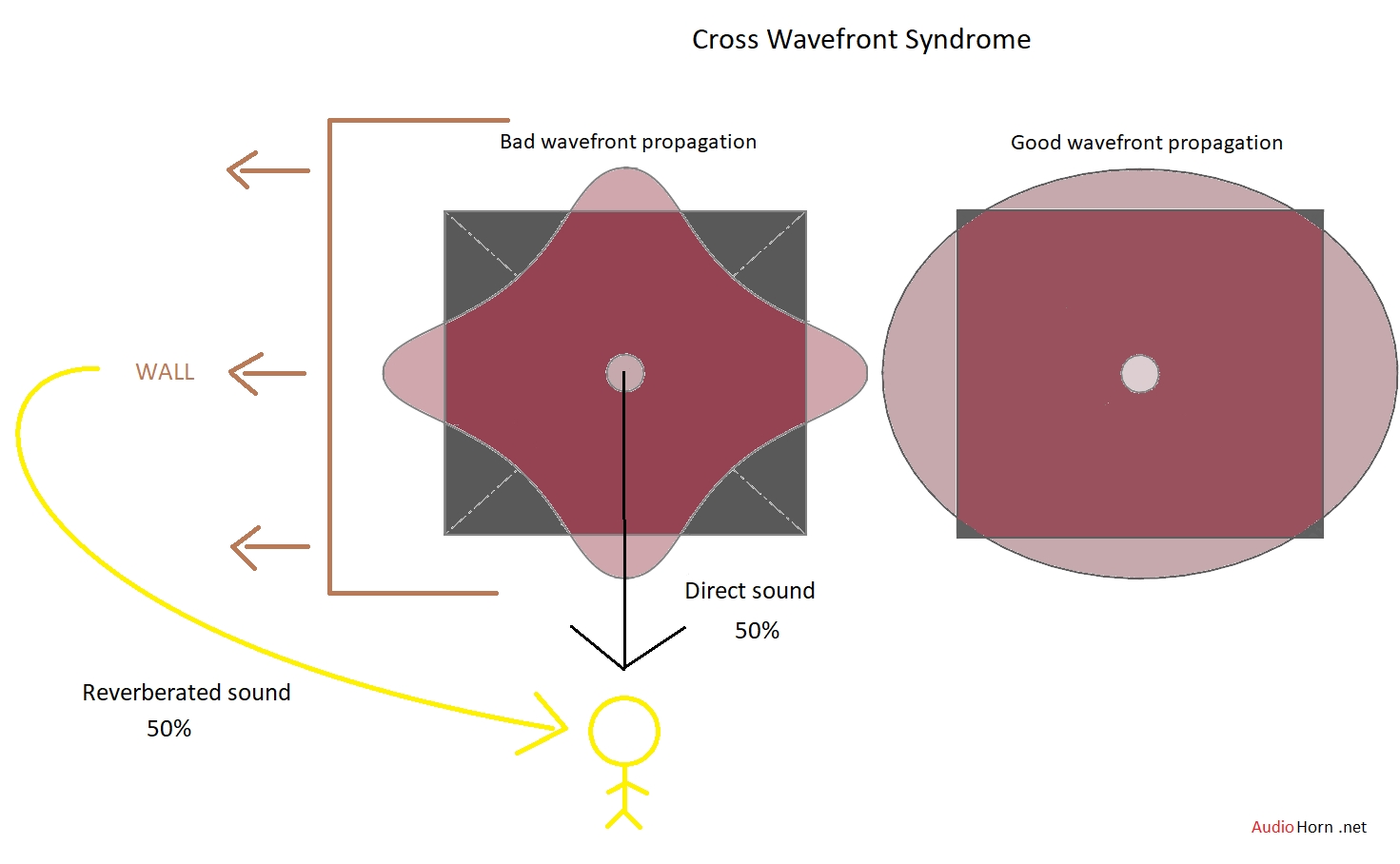

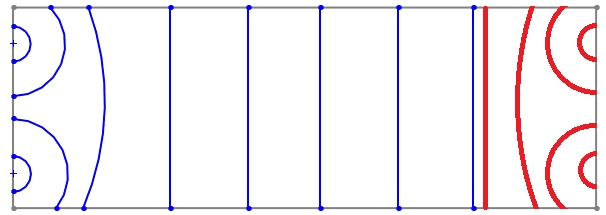

The Problem: Cross Syndrome in Horns or Waveguides

When a round waveguide transitions too quickly to a square or rectangular shape, bypassing the elliptical stage or another smooth way to do, it creates a sudden change in the wavefront’s edges expansion rate.

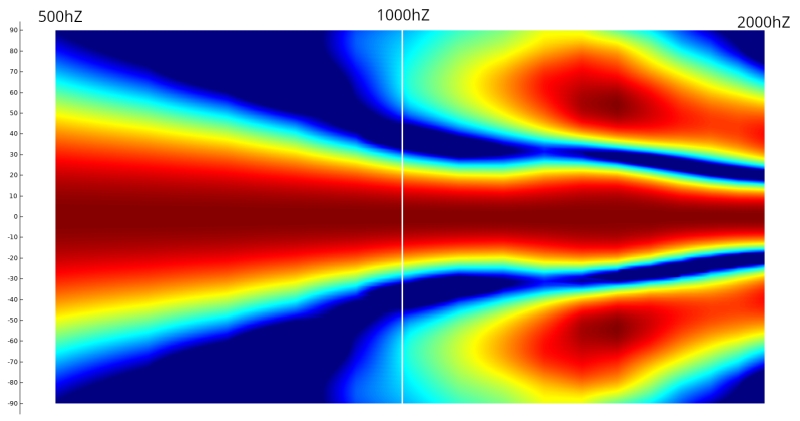

This rapid change causes diffraction primarily at the diagonals, disrupting the wavefront in high frequencies. This phenomenon can be named “cross syndrome” as the wavefront will form a cross shape.

Why We Hear It: Audibility

Even subtle changes in the direct sound caused by diffraction will be audible due to how we listen in rooms.

Most listening happens within the “critical distance” where both direct sound from the speaker and reflected sound bouncing off walls contribute significantly in a ratio close to 50/50.

This means even diffraction effects off axis become noticeable even when listening directly in front of the speakers.

These compromised wavefront are audible and present in your listening experience. This will lead to a:

-

“Floating” sensation: The compromised wavefront might seem disconnected from the rest of the soundstage, creating an unnatural spatial impression.

-

Shifting power response: As the off-axis sound degrades with increasing frequency due to diffraction, the perceived balance of the sound can change, making it seem less powerful or detailed at higher frequencies.

A proper wavefront should be round or elliptical, not cross shaped. The horn mouth is not in cause, it’s the overall profile.

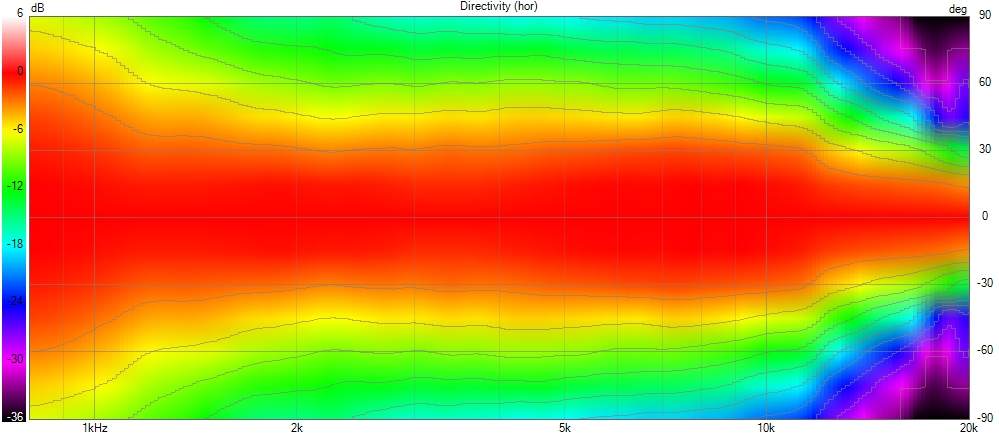

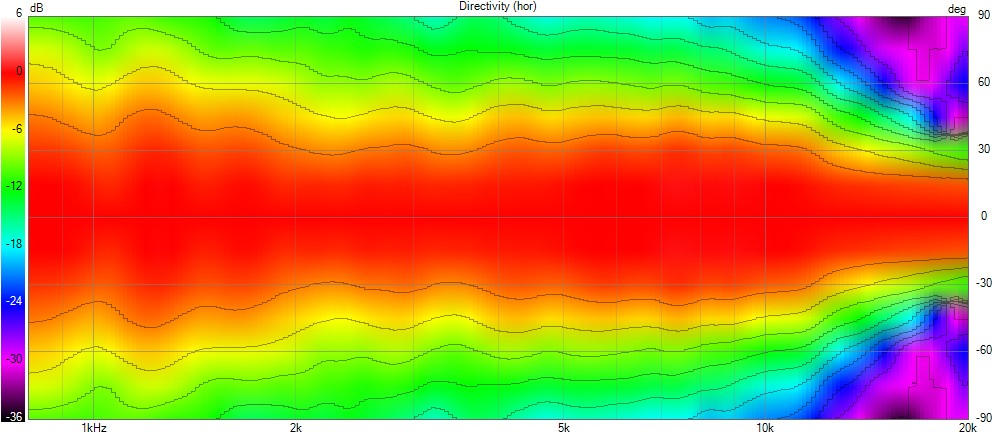

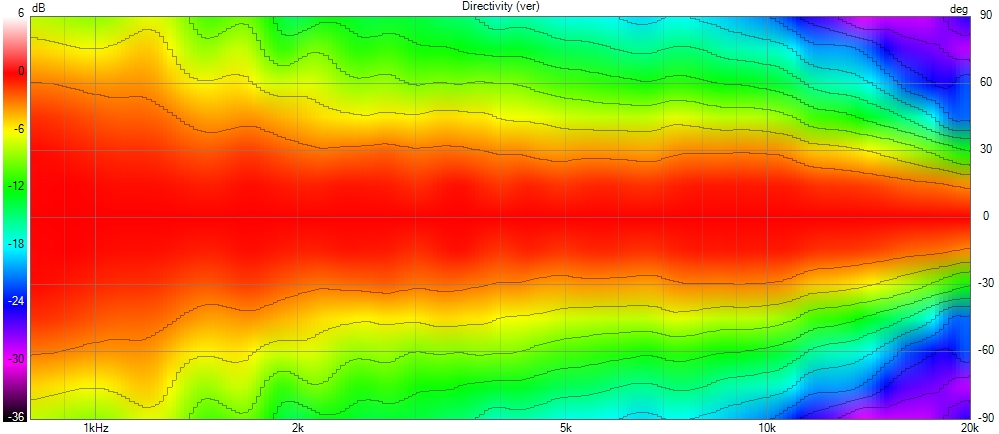

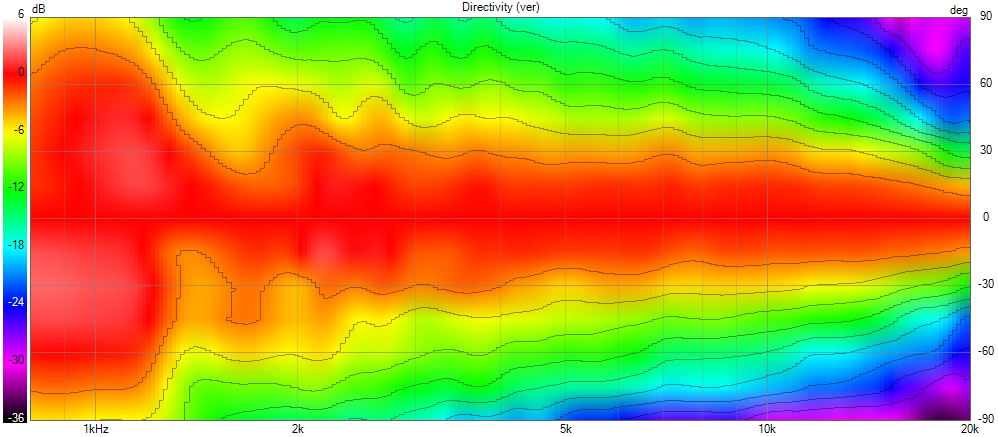

Why some measurement don’t detect it

A horizontal polar is done at a 0° vertical position, and a vertical polar is done at a 0° horizontal position. This means that the measurement is valid on two 2D planes.

Some designs that can suffer from cross wavefront syndrome, such as MEH/synergy horns, should be measured diagonally, and a diagonal polar plot should be provided.

We also need to pay attention to scale. A “half-space” option in VituixCAD displays the polar response over 180° instead of 360°. If we stay in the 360° mode, the scale is affected, and the polar response may appear more consistent than it actually is.

The same issue exists with the color scale, where using solid colors for a 2 or 3 dB range instead of a gradient can hide certain problems.

Lastly, an even simpler issue is with polar maps that start at 0 dB or use the same color for ranges like +6 dB to -2 or -3 dB (and sometimes even down to -6 dB). This completely obscures the true response of the horn.

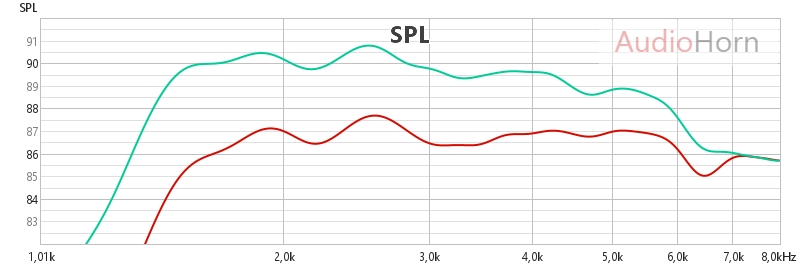

AudioHorn Solution

X-Shape horns and PureShape tweeter waveguide address this problem by focusing on the diagonal transitions.

Using advanced Finite Element Analysis (FEA), a specific diagonal profile is designed to ensure the wavefront maintains its integrity, even along these critical axes.

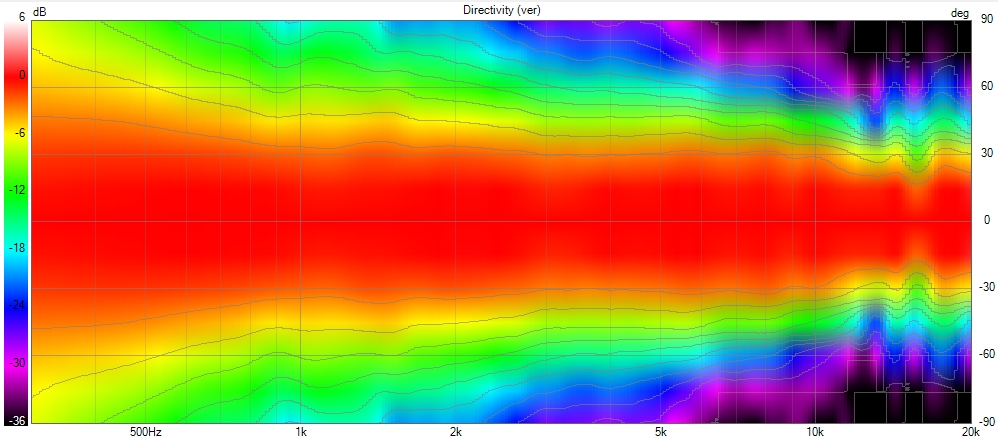

This allows the wavefront to smoothly follow the curvature without encountering drastic changes in geometry, removing diffraction as we can see here with a polar of the X-Shape diagonal (the worst place to measure a horn) :

In the world of sound manipulation, both delay and acoustic phase play a vital role, influencing how we hear and experience audio. While often used interchangeably, understanding the distinction between them is key to achieving desired sonic effects.

Delay: A Controlled Time Shift

Delay, in the context of Digital Signal Processing (DSP), refers to the intentional postponement of a sound signal by a specific time interval. Think of a sound wave reaching your ears. With delay, a copy of that wave is electronically held back for a fraction of a second before being released. This creates an echo effect, a delayed replica following the original sound.

Acoustic Phase: The Timing Within the Wave

Acoustic phase, on the other hand, is an inherent property of sound waves themselves. Every sound wave has a cyclical pattern of peaks and troughs, representing areas of high and low pressure. Acoustic phase refers to the specific point within this cycle at which the sound wave begins. Consider two identical sound waves playing simultaneously. If their peaks (or troughs) line up perfectly, they are considered “in phase.” Conversely, if one wave’s peak coincides with the other’s trough, they are “out of phase.”

The Combined Effect on Sound Perception

The interaction between delay and acoustic phase significantly influences how we perceive sound. In-phase sounds tend to reinforce each other, leading to a louder and fuller perception. Out-of-phase sounds can partially or completely cancel each other out, resulting in a quieter or thinner sound.

As an example: Two speakers playing the same sound, but one speaker experiences a slight delay compared to the other. Depending on the delay time and the specific frequencies involved, the sounds from the speakers may combine constructively (in-phase) or destructively (out of phase). This can lead to comb filtering, where certain frequencies are boosted while others are attenuated, creating an uneven tonal response.

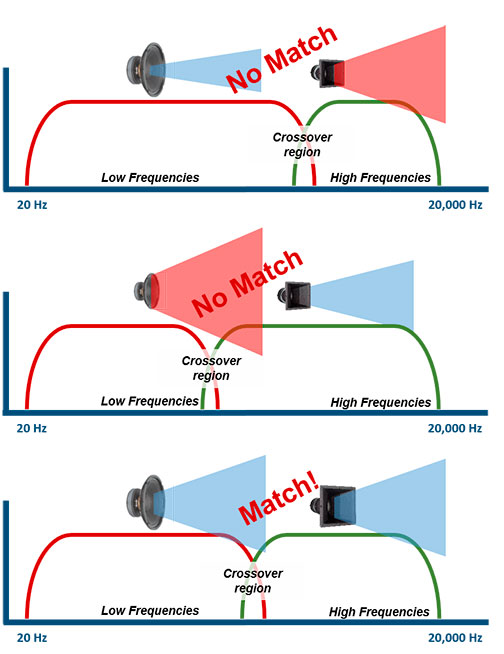

The Interplay in Crossover Design

In crossover design, both delay and acoustic phase interact to influence the sound reaching the listener. Here’s how:

-

Phase Alignment: Ideally, at the crossover frequency, the acoustic phases of the woofer and tweeter outputs should be aligned. This ensures their peaks and troughs coincide, resulting in a coherent and balanced sound.

-

Phase Shifts in Filters: Crossover filters inherently introduce phase shifts at different frequencies. By strategically adjusting the delay applied to each driver, we can compensate for these phase shifts and achieve a more linear overall phase response across the crossover region.

The Collaborative Effort

Delay and acoustic phase are distinct concepts, but in crossover design, they work together to achieve a smooth and accurate transition between drivers. By understanding their individual roles and their interaction, loudspeaker designers can optimize crossover performance for a superior listening experience.

What is “Honk” Sound

The term “honk” in audio is often associated with an undesirable coloration that gives a nasal or hollow character to the sound. This phenomenon can arise from several combined sources:

-

Throat resonances: Unintended resonances within the throat of a horn can introduce peaks in the frequency response, leading to a honky character.

-

Time-domain anomalies: A combination of throat resonance and an abrupt termination of the horn profile can create reflections and energy storage effects, resulting in temporal smearing.

-

Path length differences: A significant delay caused by a long acoustic path in the horn compared to other frequency components can lead to phase cancellation, creating irregularities at the crossover point.

-

Propagation modes in the horn: Certain horn geometries, especially older and curved designs with non-optimized profiles, can support unwanted propagation modes (cutoff modes). These modes interfere with the main signal, introducing frequency and phase anomalies that contribute to a honky sound.

-

Diaphragm breakup: Older compression driver diaphragms exhibited breakup modes at lower frequencies than modern designs, potentially contributing to a honky coloration.

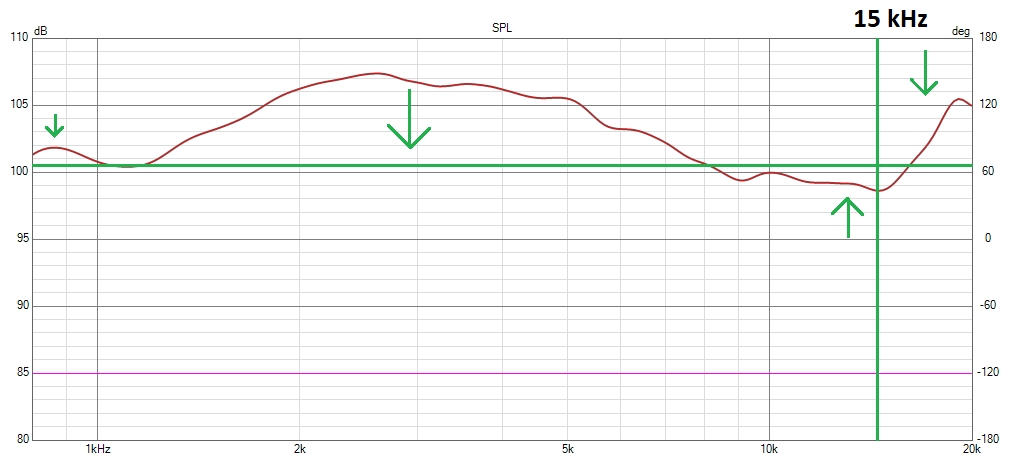

History

At the beginning of the audio industry, amplifiers were unable to deliver significant power. Early tube amplifiers could only produce a few watts:

To compensate for this limitation, the audio industry relied on high-efficiency acoustic loading — at the expense of everything else. This focus on maximizing efficiency with a very long throat came with severe trade-offs in linearity, directivity control, resonances, viscothermal losses, higher-order modes (HOM), and increased distortion at high frequencies — together, these phenomena can be perceived as “Honk”.

This approach also led to significant compromises in overall sound quality and directivity control.

A prime example is the Western Electric 15A, introduced in the 1920s:

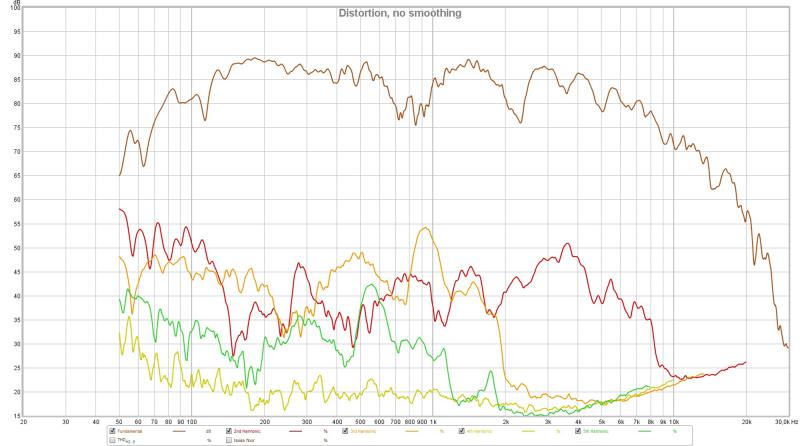

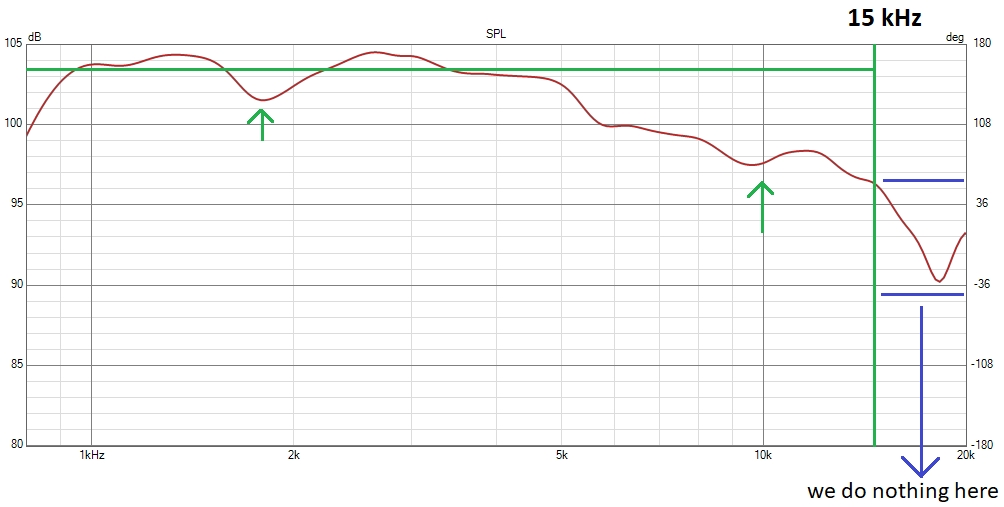

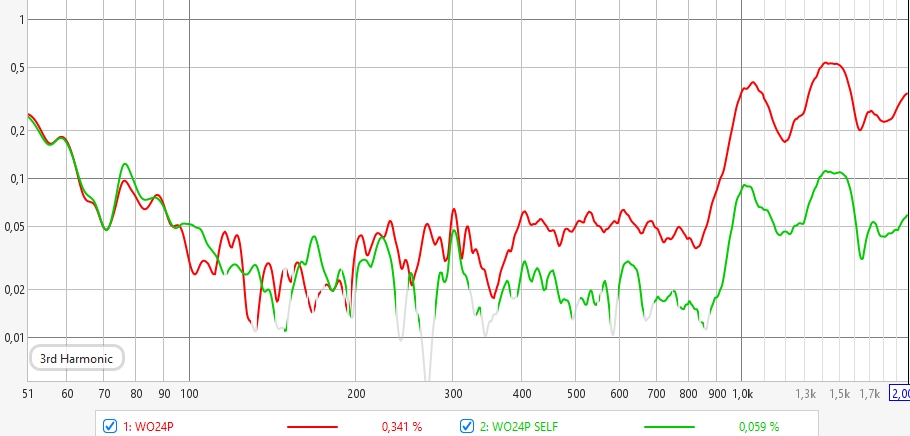

With measurement and distortion at 90dB SPL at 1m with a Lamar compression driver:

Over time, this early limitation wrongly became a defining trait of all horn-loaded systems, giving rise to a persistent and unfounded cliché. Despite decades of advancements in horn design, many still associate horns with this outdated flaw, even though modern designs have completely eliminated the causes of “honk.”

How Audio Solved It

The issues that caused the “honk” sound a century ago have been progressively eliminated thanks to technological advancements. Key improvements include:

-

Amplifier advancements: With the transition from low-power tube amplifiers to Class AB and now Class D, power is no longer a limiting factor. We can now use the adapted horn loading, optimizing horn throat design while respecting wavefront propagation.

-

Finite Element Analysis (FEA) and simulation: Modern modeling tools allow engineers to simulate wavefront behavior with precision, ensuring optimal throat shaping and loading without introducing unwanted artifacts.

-

DSP and active filtering: Digital processing has definitively solved the delay issues between components, making phase alignment and crossover transitions seamless.

-

Fluid horn profiles: These advanced geometries eliminate throat resonances, further enhancing clarity and accuracy in sound reproduction.

-

Improved compression drivers: Innovations in diaphragm materials, surround design, and phase plugs have pushed time-domain issues far beyond the range of human hearing, effectively eliminating artifacts associated with older designs.

-

Better understanding of wavefront behavior and directivity: Over the years, extensive research has deepened our knowledge of how wavefronts propagate within horns, helped by FEA. We now fully understand the importance of directivity matching between drivers to ensure a smooth frequency response tansition at crossover and avoid accidents at the crossover region.

The Role of Crossover Optimization and Directivity Matching

A common misconception is that midrange crossovers inherently degrade sound quality.

However, modern design techniques ensure that, when properly implemented, crossovers pose no issue. By precisely controlling:

- Delay

- Phase alignment

- linear SPL

- Crossover frequency chosen considering distortion and directivity matching

- Proper and stronger slope

Properly implemented systems achieve seamless transitions between drivers without introducing audible artifacts.

One crucial aspect often forgotten is directivity matching at the crossover point. If the directivity patterns of adjacent drivers do not align properly, this can lead to a perceived “empty midrange,” where energy is either lost off-axis or becomes accidented.

By carefully designing the crossover region to maintain a consistent radiation pattern, we ensure a smooth and natural transition across the frequency spectrum.

Today’s tools, such as VituixCAD and REW, allow for advanced crossover simulation, measurements and optimization of crossover design including directivity match, ensuring perfect integration between components. Thanks to these technologies, crossover points in the midrange are no longer a limitation but rather an integral part of achieving optimal performance.

Conclusion

The so-called “honk” sound was a byproduct of early horn-loaded systems, where efficiency was prioritized over all other design considerations due to amplifier power limitations. These compromises led to resonance issues, poor directivity control, and time-domain distortions that reinforced the negative perception of horns.

However, modern advancements in amplification, simulation, DSP, horn geometry, and compression driver technology have completely eliminated the root causes of “honk”. Today, properly designed horn-loaded systems benefit from optimized throat loading, controlled directivity, and seamless crossover transitions. Software like VituixCAD ensures precise phase alignment, time correction, and directivity matching in crossovers, making them transparent and free of artifacts.

In short, “honk” is a relic of the past, a problem solved long ago. Properly implemented horn designs now offer high efficiency, controlled dispersion, and accurate sound reproduction without compromise.

The outdated cliché of honky horns persists, but the reality is that modern constant directivity horn systems, when properly designed with loading capacity as we do, achieve unmatched linearity, phase coherence and neutrality, setting the benchmark for high-performance loudspeaker design.

Demystifying Directivity Match

In the world of loudspeaker design, achieving a seamless transition between different driver components is crucial for optimal sound quality.

This article delves into the intricacies of directivity matching between horns or waveguides, and woofers, a process that ensures a cohesive and natural listening experience.

The Importance of Directivity Match

When designing a loudspeaker that combines a woofer with a horn-loaded or waveguided high-frequency driver, one critical aspect is matching their directivity patterns around the crossover region.

Each direct radiation driver has its own transition frequency, the frequency at which the loudspeaker’s directivity changes from wide (omnidirectional) dispersion to narrower, more focused dispersion due to the physical size of the source. This transition frequency is roughly estimated by the formula f = c / (2πR), where R is the effective radiating radius.

Understanding Cone Radiation Directivity

There are two main stages of diaphragm – or cone driver – behavior as frequency increases: pistonic motion and non-pistonic motion.

At low frequencies, the diaphragm moves uniformly in pistonic motion, radiating sound broadly and evenly.

As frequency rises, the size of the radiating surface becomes comparable to the wavelength, causing phase differences (delays) between the diaphragm center (driven by the voice coil) and its edges. These phase shifts lead to a progressive narrowing of directivity.

This transition from pistonic to non-pistonic motion primarily results from geometric and wave propagation effects across the diaphragm surface, and it occurs even if the diaphragm material were perfectly rigid.

Material properties like stiffness and damping can also influence diaphragm behavior but have a lesser impact on directivity compared to the geometric phase effects.

This narrowing of directivity is the reason why the crossover to the high-frequency driver is usually placed around that frequency, so that both drivers have matching directivity for a smooth sound transition.

The size and shape of the baffle also affect this directivity transition.

This transition frequency f_transit can be approximated by:

f_transit ≈ c / (2 π R)

where c is the speed of wave propagation in the diaphragm material, and R its radius.

Why Directivity Match Matters

At the crossover frequency (close to the transition frequency), the directivity of both drivers should ideally match to ensure a smooth spatial response.

Without this match, response dips appear off-axis, degrading the coherence of the reproduced soundstage.

Even when listening on-axis only, the reverberated energy — shaped by off-axis radiation interacting with room acoustics — plays a significant role in the perceived sound.

This is because, at typical listening distances around the critical distance, the sound reaching the listener is approximately a 50/50 mix of direct sound from the speaker and reverberated sound reflected by the room.

If the woofer’s directivity narrows too much in the crossover region, it emits little energy off-axis in that frequency range. As a result, the room receives less energy in this midrange band, creating an energy dip in the perceived sound and a sensation of an “empty midrange.”

This interplay between directivity and room reflections makes directivity matching crucial for natural tonality, clarity, and spatial coherence.

Acoustic Center Distance

Another key factor is the physical distance between the acoustic centers of the woofer and the high-frequency driver.

Ideally, this spacing should be less than or equal to 66% of the wavelength at the crossover frequency — a topic we cover in more detail in our vertical lobing article.

Minimizing this distance helps maintain vertical consistency, reducing lobing artifacts and further reinforcing the perception of a seamless transition between drivers.

Empty Midrange Feeling

Some mid-woofer drivers, such as those from PURIFI, have excellent distortion performance. This often makes it tempting to push their usable range higher than usual.

However, as previously discussed, a woofer’s directivity narrows as frequency increases.

For example, an 8-inch woofer becomes too narrow in dispersion after around 1200–1300 Hz to blend properly with a wide-dispersion device like an AMT or a direct-radiating tweeter.

Using such a crossover point leads to a poor directivity match: at crossover, the tweeter still radiates energy widely off-axis, while the woofer no longer does.

The result is a significant mismatch in how sound is distributed in space.

This isn’t visible in the on-axis frequency response but becomes obvious in polar plots and, more importantly, audible in the room.

It can produce what many describe as an “empty midrange” — not because those frequencies are absent on-axis, but because the woofer no longer emits energy off-axis in that range.

As a result, the room does not reflect those frequencies back to the listener as it does for lower or higher bands, creating a dip in perceived energy and listener envelopment in the midrange.

In other words, it’s the interaction between the woofer’s narrowing directivity in the crossover region and the room’s reflective field that creates this perceptual gap.

Using Midrange Narrowing as a Directivity Tool

Midrange Narrowing is often perceived only as a flaw to be avoided.

However, in the specific case of woofer-to-horn transitions, it can also be deliberately used as a directivity-shaping mechanism, provided its frequency placement is carefully controlled.

For a mid-woofer, directivity naturally narrows as frequency increases due to its finite radiating diameter.

The baffle itself contributes to this behavior: a cabinet that is just wide enough to house the woofer introduces a predictable midrange narrowing related to edge diffraction.

When this narrowing is intentionally positioned near the crossover frequency, it can reinforce the woofer’s natural directivity transition.

In this configuration, the combined effect of:

- the woofer’s geometric directivity,

- and the baffle-induced midrange narrowing,

allows the woofer’s polar response to approach that of the horn at crossover, improving directivity matching.

If the baffle is made too wide, this narrowing shifts to a lower frequency, creating a mismatch as the woofer does not become directive enough and a visible directivity anomaly appears just below the crossover frequency. Conversely, a well-proportioned enclosure enables a smooth and progressive narrowing that aligns with the horn’s intended coverage.

This approach is specific to woofers and mid-woofers.

For horns, waveguides, and tweeters, midrange narrowing must instead be minimized or eliminated, as their directivity is already defined by geometry and should remain stable through the crossover region.

The Wide Perfect Match: A Myth?

The pursuit of a wide directivity match on all the bandwidth at all frequencies, can be a misguided approach.

The crossover slope, along with meticulously adjusted time delays, plays a vital role in ensuring a smooth transition between the woofer’s coverage and the horn/waveguide’s coverage. This transition should be free of abrupt changes (on-axis or off-axis) to avoid unwanted coloration or distortions in the sound.

Moreover, a common misconception in modern loudspeaker design is prioritizing an exact 120-degree directivity match at crossover and with all frequencies on the depends of more important aspects like coverage adapted to listening distance.

This “one-120°-coverage-fits-all” approach leads to completely ignores psyckoacoustics principles and coverage adapted to distance as see in critical distance article.

Improved Directivity with Round-Over Returns

Our horns incorporate a design element called a “round-over return.” This feature enhances directivity control by mitigating the narrowing effect call midrange narrowing/beaming that occur in the midrange frequencies.

The round-over return is a smooth, curved transition that seamlessly follows the horn’s profile until it meets the side of the enclosure. This design minimizes disruptions to the wavefront, preventing unwanted narrowing of the sound dispersion pattern in the midrange.

Benefits of Round-Over Returns:

-

Improved Directivity: By eliminating midrange narrowing, the round-over return ensures a more consistent and predictable directivity pattern across the entire frequency spectrum.

-

Smoother Sound Transition: The smooth curvature of the return minimizes potential phase cancellations and contributes to a more natural and seamless transition between the horn and the enclosure.

-

Enhanced Off-Axis Response: The improved directivity control translates to a more consistent tonal balance thanks to coherent power response (the fusion of direct and reverberated field as seen upper), for listeners positioned both on and off-axis from the speaker.

Directivity Matching Between Horns: A Different Approach

When dealing with multiple horns or waveguides in a loudspeaker design, the crossover approach differs slightly. Here, the ideal crossover point is not where each component loses its directivity, but rather where both maintain a constant directivity pattern. This constant directivity should be consistent not only at the crossover frequency but also one octave below and one octave above the crossover point.

Following these principles of directivity matching ensures a natural and cohesive sonic experience for the listener, free from unwanted artifacts and with a smooth transition between different driver components within a loudspeaker.

When sound travels from your speakers, it bounces off nearby surfaces. Reflections from the floor and ceiling, in particular, can significantly impact the overall sound you hear. These reflections arrive slightly later than the direct sound from the speaker, creating a phenomenon known as comb filtering.

Comb filtering essentially adds and subtracts certain frequencies at specific points in the listening area. This can lead to uneven tonal balance, with some frequencies being boosted and others being cancelled out.

How Directivity Affects the Bounce

The way a speaker distributes sound (directivity) plays a big role in how susceptible it is to floor and ceiling bounce.

Speakers with narrow vertical dispersion tend to excite less the rebound, minimizing the impact of reflections from above and below.

Total Early Reflection (TER) and the Room Equation

While speaker manufacturers typically provide a frequency response curve showing a speaker’s output at different frequencies, this is usually measured in an anechoic chamber – a special environment designed to absorb sound reflections. In a real room, reflections from the floor, ceiling, and walls significantly impact the sound you hear.

Total Early Reflection (TER) is a crucial concept that helps us understand this real-world sound experience. TER specifically measures the combined effect of the first reflections that arrive at the listening position after bouncing off nearby surfaces. These early reflections can significantly alter the tonal balance and overall clarity of the sound.

Evolutionary Adaptation and the Human Auditory System

Interestingly, our brains have likely adapted to compensate for the effects of room acoustics to some extent. For millennia, since the days of Homo sapiens living in caves, our auditory systems have been processing sound in environments with reflections.

This means our brains may be able to partially “correct” for some of the coloration caused by floor and ceiling bounce.

Crossover Design and Minimizing Interference

Another factor to consider is speaker crossover design. A crossover is a circuit that divides the audio signal into different frequency ranges and directs them to the appropriate speaker drivers (woofer, tweeter, etc.).

When the crossover frequencies are close to the resonant frequencies caused by floor bounce, it can be use, by looking power response, to diluate the bounce effect by taking account in crossover design.

Imagine two drivers in a speaker – one positioned higher and one positioned lower. If they are reproducing similar frequencies at the same time, but one is slightly delayed due to its distance from the floor (floor bounce), they can interfere with bounce effect differently.

Crossover design can plays a role in minimizing the impact of floor bounce. While the height difference between drivers can cause slight phase delays, a well-designed crossover can address this. By analyzing the power response, the crossover can be designed to “dilute” the bounce effect, reducing unwanted reflections but it shouldn’nt be done at the expense of putting mid-woofer/woofer as close at possible of tweeter section.

Taming the Bounce: Achieving Optimal Listening

By understanding the effects of floor and ceiling reflections, directivity, power response, and even our own evolutionary adaptations, we can achieve a more balanced and enjoyable listening experience in any room. Here are some tips:

-

Speaker placement: Experiment with speaker positioning relative to the walls and your listening area. Even a few inches can make a difference.

-

Room treatments: Consider acoustic panels or diffusers to absorb or scatter sound reflections, especially if your room has hard, reflective surfaces.

-

Understanding crossover design: While not directly controllable by the listener, being aware of how crossover design can minimize interference due to floor bounce can be helpful when choosing speakers but it must not be done on the depend of the point below.

-

Lower crossover frequency: As mentioned earlier, a lower crossover encourages the woofer to handle a wider range of frequencies at lower wavelengths. This promotes better directivity at the lower frequencies most susceptible to floor bounce, reducing the overall energy bouncing around the room.

-

Narrower vertical dispersion: Speakers with wider vertical dispersion spread the sound out more, which can actually increase reflections off the floor and ceiling. Conversely, speakers with narrower vertical dispersion tend to focus the sound more towards the listening area, minimizing the interaction with reflective surfaces above and below. It’s one of the raison that the coverage should be choosen according to listening distance and acoustic.

Introduction

The way a loudspeaker is mounted — free-standing or flush-mounted in a wall — has a major influence on its directivity behavior, particularly around the crossover region.

Proper directivity matching ensures that the woofer is crossed over when it becomes sufficiently directive, while the horn or waveguide is crossed over when it starts to lose its controlled directivity, creating a smooth and coherent transition between the two drivers.

An oversized cabinet or a rigid in-wall installation can widen radiation at the crossover frequency, making proper directivity matching with a horn extremely difficult, and can degrade directivity control below this region until wavelengths become sufficiently large.

This article focuses on understanding these mechanisms and identifying practical solutions.

1. Free-Standing Case

Smooth horn and waveguide design

For horns and waveguides, the profile must be smooth and continuous along the axis of wave propagation.

Rounded edges and the absence of abrupt depth changes ensure a consistent progression of the wavefront and stable directivity, midrange narrowing must be avoided.

Woofer directivity and baffle size

In the case of a woofer, its directivity can approximate that of a similarly sized horn at crossover if the enclosure is just large enough to house the driver.

This is the key design principle: the cabinet should not be oversized. By limiting the baffle width, the resulting midrange narrowing occurs at the intended frequency,

allowing it to cooperate with the woofer’s natural directivity transition.

A properly sized baffle ensures that:

- The midrange narrowing occurs where the woofer starts to become directive,

- The woofer’s polar response aligns smoothly with the horn’s controlled directivity at crossover,

- Directivity match is optimized without introducing wavefront distortions or frequency shifts.

If the baffle is made too large, the midrange narrowing shifts to a lower frequency, the woofer does not become directive enough at the intended crossover, and optimal directivity matching with the horn becomes impossible.

Wavefront distortions with oversized baffles

At mid and high frequencies, an oversized baffle distorts the wavefront leaving the driver.

Part of the sound propagates at very shallow angles along the baffle surface (quasi-lateral wave), while abrupt edges excite evanescent near-field components.

Their interaction with the main propagating wave alters the overall wavefront, resulting in uneven radiation and degraded directivity

until the wavefront becomes dominated by wavelengths larger than the baffle, at which point these distortions gradually vanish.

Matching woofer and horn directivity

The directivity of a mid-woofer evolves with frequency:

- At low frequencies, radiation is wide and close to omnidirectional.

- As frequency increases, directivity narrows progressively as the effective radiating diameter becomes limiting.

By ensuring the baffle is just large enough for the woofer, the midrange narrowing occurs at the intended frequency, cooperating with the woofer’s natural directivity transition.

This allows the woofer’s polar response to blend smoothly with the horn’s directivity at the crossover, achieving a predictable and coherent directivity match.

2. In-Wall Case

Directivity behavior in wall installations

When a loudspeaker is flush-mounted in a rigid wall, radiation is confined to a half-space (180° hemisphere, or less in corners).

This increases output at very low frequencies, but around the crossover region it often creates a severe directivity mismatch.

In this configuration, the woofer’s radiation becomes wider than in free-standing conditions and typically wider than the horn’s controlled directivity (often around 90°), resulting in an uneven directivity index transition.

Note: An in-wall installation does not remove the need to aim the speaker toward the listening position.

The front wall must be shaped accordingly; a flat wall is not sufficient.

Wavefront behavior with a hard wall

When a waveguide is flush-mounted in a rigid wall, the wavefront does not detach cleanly into space.

Three contributions coexist:

- the main propagating wave,

- a quasi-lateral wave traveling along the wall surface,

- evanescent near-field components excited by opening edges (including those of the woofer itself).

Their interference alters the phase and curvature of the wavefront, producing irregular radiation and directivity anomalies.

The observed directivity anomalies in rigid in-wall installations are caused by the combined interaction of propagating and non-propagating wave components near the mounting surface.

Evanescent near-field components are generated at sharp impedance discontinuities such as waveguide terminations, driver apertures, and cabinet or woofer edges. Although these components do not propagate into the far field on their own, they modify the local pressure and particle velocity distributions near the wall. When coupled to a rigid boundary, they can partially convert into propagating energy at larger distances, altering the effective phase and curvature of the radiated wavefront.

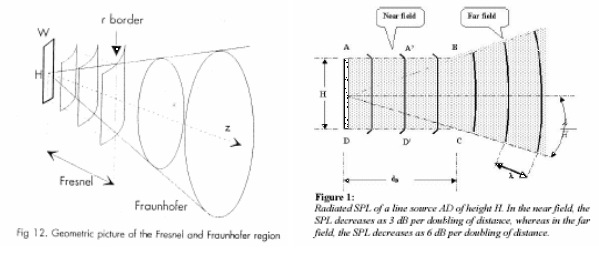

In addition, the woofer’s initial wavefront contributes to these anomalies. Its near-field radiation is not a well-defined planar wave; local pressure and velocity variations, arising from diaphragm motion, geometry, and driver-edge discontinuities, coexist with a chaotic, quasi-reactive region immediately in front of the driver, a non-radiative zone where the acoustic field is not yet fully propagated, with pressure and particle velocity out of phase, and where energy is partially stored rather than radiated efficiently.

Note: this aspect also makes designing a woofer waveguide challenging, because the waveguide sees the near-field wavefront before it reaches the Fresnel region, where the front is highly non-uniform and varies with frequency.

In an in-wall installation, these irregularities interact with the evanescent components and quasi-lateral waves, amplifying directivity anomalies even when the cabinet front is flush.

In parallel, quasi-lateral waves are excited along the rigid wall surface due to the strong acoustic impedance contrast between air and the boundary. These waves are neither purely standing waves nor free-field radiation. They are guided surface-related modes that transport energy laterally before re-radiating into the listening space. Their delayed and frequency-dependent re-radiation interferes with the main wavefront, producing lobing, angular irregularities, and non-monotonic directivity index behavior.

These effects become most pronounced when the natural directivity of the radiator becomes narrower than the hemispherical radiation imposed by the wall. In this regime, the wavefront cannot detach cleanly from the surface, and the wall enforces an artificially wide radiation pattern that contradicts the source’s inherent directivity. The result is not simple midrange beaming or diameter-related narrowing, but an unstable transition dominated by boundary-induced wave phenomena.

Moreover, the rigid wall forces the woofer to radiate over a wider angle near the crossover region. As a result, its directivity can exceed that of the horn at the crossover frequency, making the transition region prone to off-axis irregularities.

As frequency decreases and wavelengths become much larger than the source, these effects progressively vanish and radiation approaches ideal half-space behavior at very low frequencies.

The constricted radiation gain provided by the wall, from 4π to 2π for example, occurs only when the natural radiation of the driver is wider than the available aperture of the wall, typically at low frequencies.

When the natural directivity at a given frequency becomes narrower than the hemispherical radiation (~180°) imposed by the mounting surface, the wavefront must detach and radiate freely into half-space.

If the wavefront cannot detach properly at that point, the wall forces an artificially wide radiation pattern, reintroducing the very mechanisms described above: quasi-lateral waves, evanescent near-field components, and severe directivity irregularities.

Solution: Acoustic absorber wall

For stable directivity, the wavefront must detach and radiate freely into half-space at the appropriate frequency.

This is achieved by using absorptive or porous materials around the horn and a relatively thin woofer enclosure, providing an acoustic impedance closer to that of air.

Such treatment suppresses lateral wave propagation and evanescent components, leaving only the main propagating wave to define directivity.

The front wall should be flush with the enclosure and acoustically absorptive, which not only stabilizes directivity, but also improves the room’s acoustic behavior.

It will also help, as in a free-standing ideal implementation (see above), to preserve the directivity match between woofer and horn, as the wavefront can beam naturally and combine with the woofer’s inherent directivity to reach the horn’s radiation at the intended frequency.

If this cannot be achieved, a free-standing configuration respecting proper baffle dimensions will generally yield better results, as the room or wall cannot reliably act as a horn except in the very low frequency range.

Conclusion

Mounting conditions have a critical impact on loudspeaker directivity, particularly around crossover frequencies.

Free-standing designs with well-proportioned baffles allow controlled use of midrange narrowing for directivity matching, while in-wall installations require careful absorptive treatment to avoid severe radiation anomalies.

Horn Loading and Efficiency

Horn loading is a technique used in loudspeakers to improve efficiency by shaping how sound is transferred from the driver to the surrounding air. This article explores the different aspects of horn loading, its frequency dependence, and its relationship with directivity and wavefront geometry.

We will expose technical considerations necessary for a full understanding of the phenomenon, then present a conclusion.

Acoustic Impedance

Acoustic impedance (Z) is defined as the ratio of sound pressure (Pa) to particle velocity (v):

Z = Pa/v

It characterizes how easily an acoustic wave propagates through a medium or across a boundary between two media. In practice, acoustic impedance measures the adaptation between two acoustic environments, such as a loudspeaker coupling into a room, or a compression driver coupling into a horn.

Acoustic impedance is not directly audible. Instead, it governs how much of the acoustic energy is transmitted versus reflected at a boundary. A well-matched acoustic impedance maximizes energy transfer and minimizes unwanted reflections.

In horn-loaded loudspeakers:

- At low frequencies, where the wavelength is larger than the horn dimensions, the horn does not load efficiently and the acoustic impedance mismatch leads to reduced efficiency.

- At mid frequencies, the horn improves the impedance match, thereby boosting efficiency.

- At high frequencies, the impedance tends to rise again due to smaller wavelengths and internal viscous losses, reducing the overall gain.

Additionally, directivity impacts impedance: as a source becomes more directional (naturally or by design), the radiated impedance increases. Horns use this effect intentionally to “concentrate” the energy, but it must be controlled to avoid creating too much mismatch at the horn mouth.

Finally, acoustic impedance at the horn throat typically increases with frequency, and a very high impedance combined with high particle velocity can lead to thermal compression and distortion at high SPL levels.

With a horn featuring a smooth roundover at the mouth to avoid midrange narrowing, the acoustic impedance at the horn’s mouth approaches that of free air—very low and nearly linear—while the horn geometry progressively transforms this impedance towards the throat, ensuring efficient coupling with the driver.

Acoustic impedance is a complex quantity, comprising both resistive (dissipative) and reactive (energy-storing) components.

The horn influences both parts, but the reactive load is especially important as it temporarily stores and releases acoustic energy, shaping the frequency response and directivity of the system.

Reactive Loading

Reactive loading refers to the non-dissipative component of acoustic impedance — the portion that stores, rather than dissipates, energy.

In an acoustic system such as a horn, certain elements — like the confined volume of air near the throat — behave somewhat like a spring or a mass, temporarily storing energy in the form of potential or kinetic energy without dissipating it.

This reactive impedance resists rapid changes in pressure or displacement and varies with frequency.

At low frequencies, the confined air acts as an acoustic compliance (spring), increasing the total acoustic impedance and improving energy transfer from the driver to the air.

At high frequencies, this effect decreases, as the wavelength becomes shorter and the confined air no longer behaves as a significant reactive load.

In short, reactive loading is the frequency-dependent acoustic impedance caused by the storage of elastic energy in the air near the throat (and other regions), which helps match the transducer to free air — especially at low frequencies.

Particle Velocity

Particle velocity (v) is the oscillating motion of air particles caused by a sound wave. It differs from the speed of sound (celerity), as it describes the back-and-forth movement without any net displacement.

In audio systems:

- High particle velocity typically occurs in constricted areas, such as bass reflex ports, narrow ducts, or horn throats.

- At constant sound pressure, higher particle velocity means lower impedance.

- In general, as the cross-section narrows, the particle velocity rises, even if the frequency or SPL remains constant.

Thus, particle velocity must be carefully monitored when designing small apertures or highly compressed structures to avoid excessive velocities that cause distortion and energy losses; controlling it properly helps minimize nonlinear distortion and maximize power handling.

These nonlinear effects extend beyond simple harmonic distortion — they also generate intermodulation distortion, which arises from the interaction of multiple frequencies. Intermodulation distortion particularly degrades clarity and precision, adversely affecting the perceived fidelity of the sound.

The particle velocity within the throat limits the maximum power the horn can handle. This is why compression horns often feature throats with an optimized diameter — to control particle velocity, reduce turbulence and flow losses due to viscous and thermal boundary layer effects at the throat walls, and prevent the flow from exceeding the critical Mach threshold.

However, modern phase plugs allow for much higher compression ratios by ensuring equal impedance across each acoustic path.

This design distributes particle velocity more evenly, reducing local velocity peaks and enabling greater power handling without increased distortion, or allowing a smaller throat size to extend directivity control to higher frequencies without sacrificing maximum power.

Critical Mach Number

The critical Mach number describes when the particle velocity becomes a significant fraction of the speed of sound (Mach 1). In loudspeaker design, this is particularly relevant in narrow passages or throats where particle velocity can increase substantially.

The Mach number is defined as:

M = v/c

Where:

- v is the particle velocity,

- c is the speed of sound in the medium (≈ 343 m/s in air).

At a Mach number of around 0.1 to 0.3, the acoustic behavior starts to become nonlinear. Exceeding this critical Mach number results in:

- Strong nonlinearities (distortion),

- Turbulent flow,

- Thermal compression (heating due to intense localized motion),

- Potential efficiency loss and audible degradation.

Keeping particle velocity well below the critical Mach number ensures low distortion and optimal efficiency in horn-loaded designs.

For ports, we have developed a flat velocity port that allows us to keep the Mach number low.

How These Concepts Interact with Frequency

The interaction between reactive loading, acoustic impedance, particle velocity, and the Mach number depends strongly on frequency.

-

At lower frequencies, reactive loading dominates. The confined air near the horn throat acts as an acoustic compliance, temporarily storing energy and improving energy transfer from the driver to the air. Sound waves have longer wavelengths, which results in lower particle velocities, reducing the risk of reaching the critical Mach number. Horn-loaded systems generally handle these frequencies efficiently, with minimal distortion or turbulence, benefiting from the positive effect of reactive loading.

-

At higher frequencies, wavelengths become shorter and particle velocity increases more rapidly. In narrow openings, such as the throat of a horn or the port of a bass reflex system, the risk of exceeding the critical Mach number rises. This can cause distortion, thermal compression, and reduced efficiency.

In practice, these effects occur mainly at very high SPLs or with extremely narrow throats. Modern phase plugs and impedance-optimized designs help manage airflow, reducing peak particle velocities and mitigating nonlinearities, while still allowing efficient sound radiation.

Proper impedance matching remains essential in horn design to minimize reflections and ensure optimal energy transfer. At higher frequencies, impedance tends to increase at the throat due to viscous and thermal losses, which can partially offset the efficiency gains from the horn.